This vessel does not exist.

The history of ceramics is one of imitation and reproduction.

The apprentice obtains mastery of the craft through repetition, gradually improving their technique. Guided by a lifetime of working in the craft, the master examines each piece made by the student and throws away those deemed unsuitable.

The forger creates replicas and tests them in the marketplace. The connoisseur, informed by decades of experience dealing with antiques, judges the replicas. Those that are mistaken as authentic are sold, and the forger goes on to create even more convincing copies.

GPT3 Pottery Haikus

I asked OpenAI's GPT-3 to generate haikus about pottery.

It was more difficult to get relevant responses prompting the OpenAI API for poetry related to pottery. But asking for haikus resulted in some interesting results.

one shapeless lump

from which I take

a memorable form

A homely vase

With neither

Wabi nor sabi

I render it formless

the primitive vases

I might have made

does the potter

who throws broken pots

dream somewhere

a delicate vase

that expands many feelings

like the first sound

of distant thunder

The fire

entrusted with its

most joyous function

torquing their shoulders

kilns fired continuously

winter evening

how would you make me

throw me on your wheel

a mistake worth making

being broken up

pots return to earth

the spirit to heaven

I have learned to

Put up with failure

The potter's art

a tossed-away bowl

she made last year

roundness of knowing

lumps of mud

through years of life

becoming vessels

StyleGAN2 Vase/Dress Hybrids

Using a StyleGAN2 model trained on 40,000 images of vases, transfer learning with a dataset of images of dresses.

Using a StyleGAN2 model trained on 40,000 images of vases, transfer learning with a dataset of images of dresses.

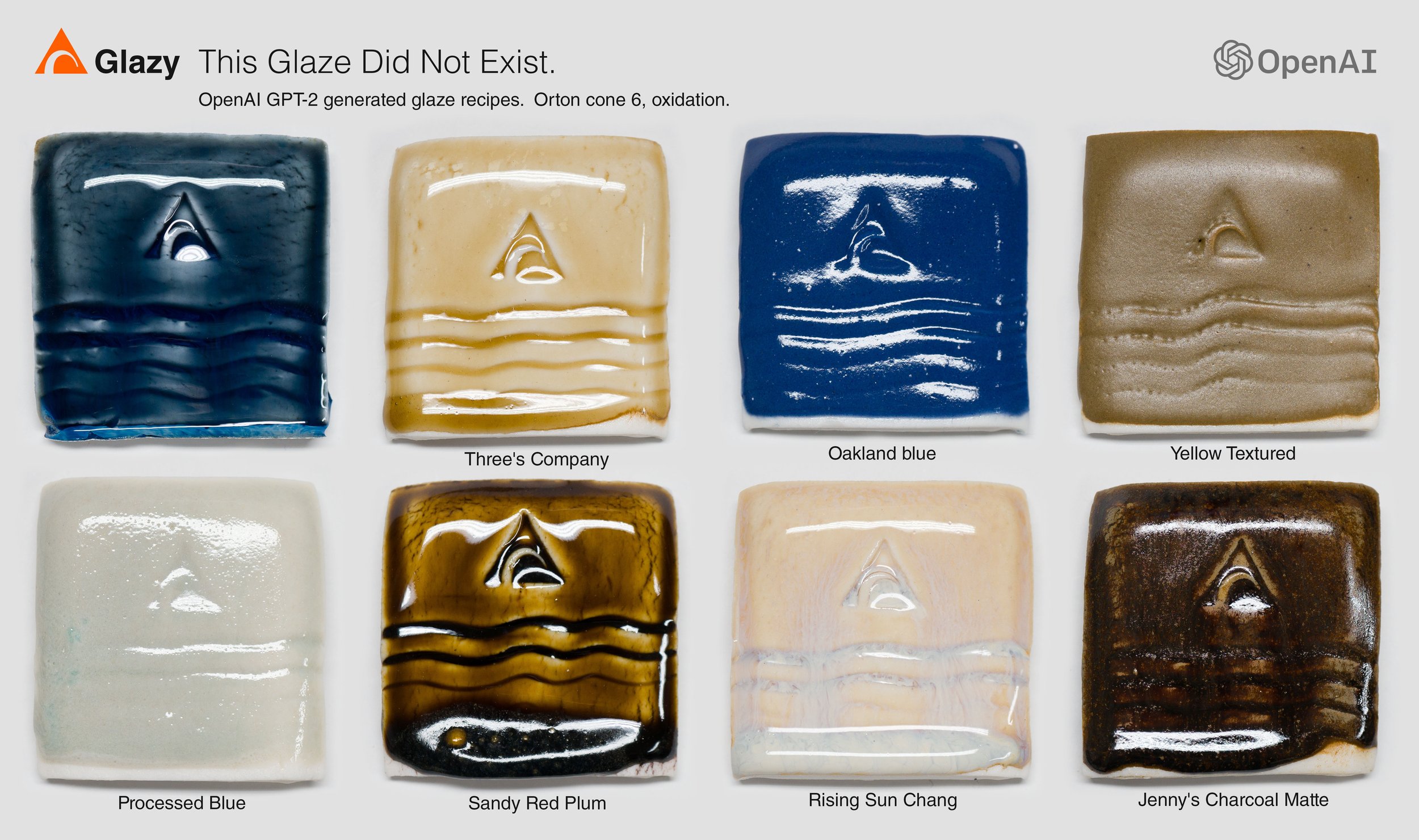

GPT-2 Generated Ceramic Recipes

Generating ceramic recipes using the GPT-2 355M model using over 10,000 public Glazy recipes as training data.

Having been on the GPT-3 API waitlist for months, I finally just decided to fine-tune the older and smaller GPT-2 355M model using over 10,000 public Glazy recipes as training data. Although the loss was still going down after 6,000 steps, I stopped training at that point as I noticed more duplicates being generated and I was afraid of overfitting my relatively small dataset.

As always, Gwern has a wonderful article, GPT-2 Neural Network Poetry. For training I followed Max Woolf's article How To Make Custom AI-Generated Text With GPT-2 and used Max's wonderful Colab Notebook

Sample recipe from training data:

RECIPE: Winokur Yellow INGREDIENT: 53.2000 Potash Feldspar INGREDIENT: 22.9000 Kaolin INGREDIENT: 19.4000 Dolomite INGREDIENT: 4.5000 Whiting INGREDIENT: 16.9000 Zircopax INGREDIENT: 3.5000 Tin Oxide INGREDIENT: 1.4000 Red Iron Oxide

I generated two sets of recipes, one with GPT-2 temperature set at 0.7 and one at 0.9. The results for both sets were surprisingly good: At first glance the recipes seemed "real" with proportional mixes of feldspars, clays, silica, fluxes and colorants/opacifiers. Even the total ingredient amounts added up to a reasonable number, usually in the range of 90-110%. The duplication rate was about 5% for t=0.7 and 4.5% for t=0.9.

Sample generated recipes (removing "RECIPE:" and "INGREDIENT:" tags):

Crawly Elsie's Matte-04 38.0000 EP Kaolin 28.0000 Gerstley Borate 19.0000 G-200 Feldspar 9.0000 Lepidolite 6.0000 Soda Ash 4.0000 Wollastonite Ame-Sosa-Wenkel 38.0000 Nepheline Syenite 29.0000 Silica 12.0000 Colemanite 8.0000 Whiting 6.0000 Dolomite 5.0000 Barium Carbonate 2.0000 Bentonite 1.0000 Rutile 0.7500 Copper Carbonate Amber Celadon 34.0000 Albany slip 20.0000 Custer Feldspar 13.0000 Silica 13.0000 Wollastonite 6.0000 Whiting 3.0000 EP Kaolin 3.0000 Gerstley Borate 3.0000 Rutile 2.0000 Red Iron Oxide Craters 30.0000 Lithium Carbonate 30.0000 Silica 15.0000 Borax 10.0000 Zircopax 10.0000 Kaolin 5.0000 Bentonite 3.0000 Copper Carbonate

Downloads:

Temperature 0.7 Generated Recipes

Temperature 0.9 Generated Recipes

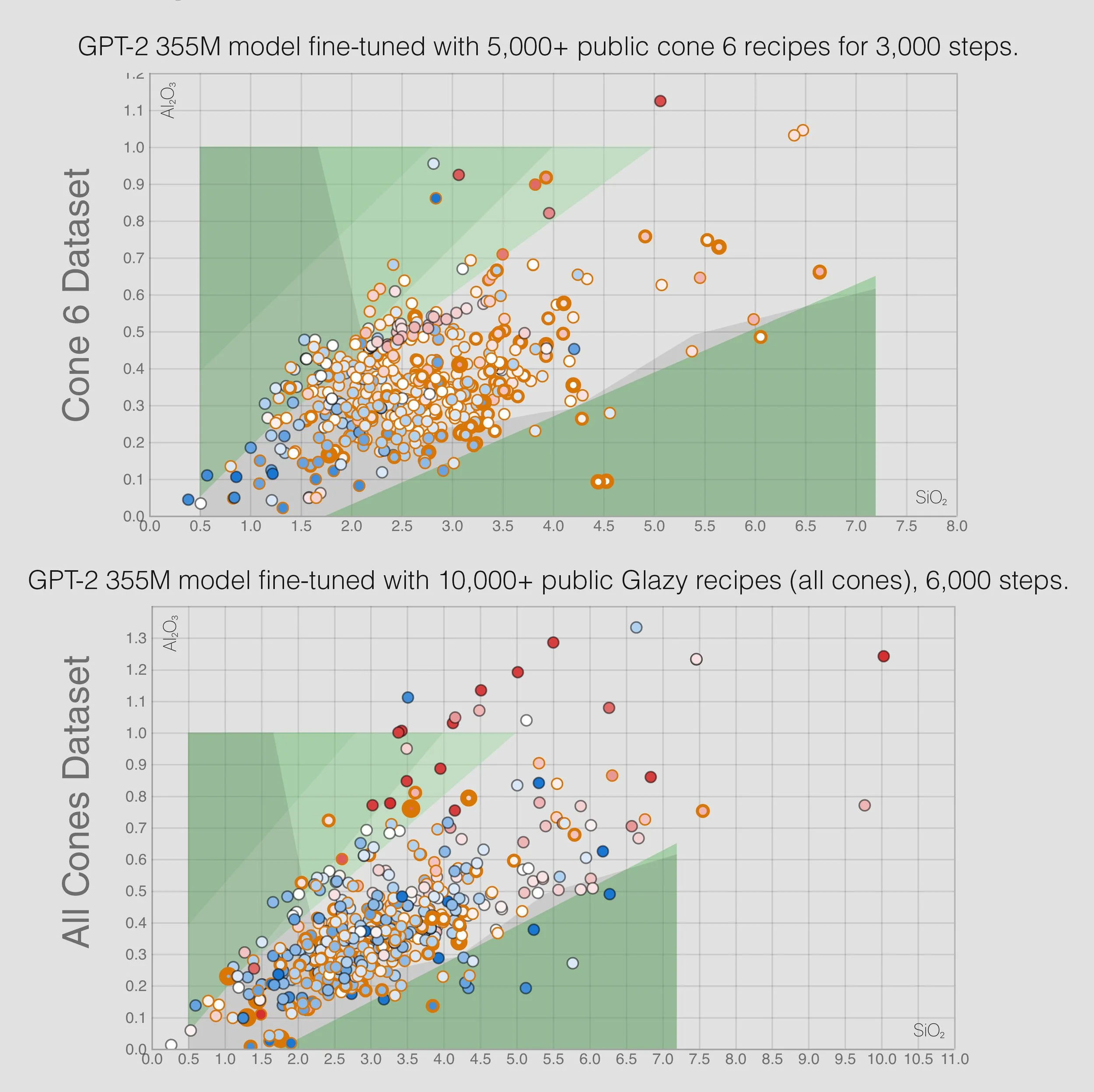

The next step was to load the GPT-2 generated recipes into Glazy in order to see their resulting analyses and visualize them on the Stull Chart. I was surprised to find that, as with "real" glaze recipes, most of these generated recipes fell comfortably within the major Stull regions of Bright, Matte, and Semi-Matte. The set with temperature setting of 0.9 generated more variation.

Generated recipes displayed in the Stull Chart.

Training against a subset of recipes, using only the cone 6 glazes, gave results with lower Silica and Alumina, as well as higher Boron.

Cone 6 generated recipes showing lower Silica & Alumina as well as higher levels of boron.

The obvious next step was to fire some of these "fake" recipes and create real glazes. Rather than randomly testing, I selected a few recipes that looked like they would fire to maturity at my chosen temperature and atmosphere, Orton cone 6 in Oxidation. The results were all quite good, and I uploaded a couple to Glazy:

GPT-2 Yellow Textured

GPT-2 Three's Company

Selected generated recipes fired at Orton cone 6 in Oxidation.

Using Glazy's public data (https://github.com/derekphilipau/glazy-data) I attempted to associate glaze metadata (type, surface, transparency and temperature) with analyses.

Input sample:

TYPE: Iron - Celadon SURFACE: Glossy TRANSPARENCY: Transparent CONE: 9 SiO2: 66.12, Al2O3: 12.08, K2O: 3.13, Na2O: 0.84, MgO: 0.06, CaO: 12.67, Fe2O3: 0.16, CuO: 0.01, ZrO2: 0.26 TYPE: Clear SURFACE: Glossy TRANSPARENCY: Transparent CONE: 8 SiO2: 53.49, Al2O3: 9.29, B2O3: 2.08, Li2O: 1.01, K2O: 0.29, Na2O: 1.11, MgO: 0.03, CaO: 0.12, BaO: 21.06, ZnO: 3.98, Fe2O3: 0.08, CuO: 0.01, ZrO2: 0.93

Output sample:

TYPE: Glaze SURFACE: N/A TRANSPARENCY: N/A CONE: 10 SiO2: 61.7, Al2O3: 8.74, B2O3: 4.26, K2O: 4.05, Na2O: 1.87, MgO: 0.52, CaO: 8.46, P2O5: 0.01, Fe2O3: 0.24, TiO2: 0.17 TYPE: Iron - Celadon - Blue SURFACE: Glossy TRANSPARENCY: Translucent CONE: 9 SiO2: 61.75, Al2O3: 12.13, K2O: 3.16, Na2O: 0.98, MgO: 0.09, CaO: 10.90, Fe2O3: 0.72 TYPE: Blue - Cobalt SURFACE: Glossy TRANSPARENCY: Translucent CONE: 9 SiO2: 61.44, Al2O3: 12.06, K2O: 2.75, Na2O: 0.93, MgO: 0.07, CaO: 11.31, Fe2O3: 0.72, CoO: 1.83, TiO2: 0.31 TYPE: Blue - Cobalt SURFACE: Glossy TRANSPARENCY: Translucent CONE: 9 SiO2: 61.44, Al2O3: 12.06, K2O: 2.75, Na2O: 0.93, MgO: 0.07, CaO: 11.31, Fe2O3: 0.72, CoO: 2.33, TiO2: 0.31 TYPE: Specialty - Crawling SURFACE: Glossy TRANSPARENCY: Opaque CONE: 6 SiO2: 51.63, Al2O3: 16.32, B2O3: 3.20, K2O: 2.63, Na2O: 2.25, MgO: 3.71, CaO: 2.96, P2O5: 0.10, Fe2O3: 0.60, CoO: 0.82, TiO2: 2.82 TYPE: Iron - Kaki, Tomato Red SURFACE: Matte - Smooth TRANSPARENCY: Opaque CONE: 6 SiO2: 44.64, Al2O3: 12.17, B2O3: 3.06, K2O: 0.07, Na2O: 4.76, MgO: 3.96, CaO: 7.55, P2O5: 2.08, Fe2O3: 8.72, TiO2: 0.06

Downloads: Generated "fake" analyses

This inspirational art quote does not exist v2

Using the OpenAI API, I rewrote my prompts for generating fake inspirational art quotes and received some more interesting responses.

After receiving access to a trial of the OpenAI API, I rewrote my prompts for generating fake quotes and received some more interesting responses.

Some of these quotes are partial plagiarisms or based on patterns found in real quotes. For example "Art is the objectification of feeling" is attributed to Herman Melville, while "Art is the objectification of feeling, and the subjectification of nature" is attributed to Susanne Langer. GPT-3 came up with its own version: "Art is the objectification of imagination seen through the eyes of empathy."

Below are some curated examples. Click the thumbnail for larger version. "Click to reload" will show a new randomized selection.

This inspirational art quote does not exist.

Prompting GPT-3 for inspirational art quotes.

After prompting the GPT-3 with real quotes by famous artists like "Art is a line around your thoughts" (Gustav Klimt) and "To be an artist is to believe in life" (Henry Moore), GPT-3 responded with a wide range of its own quotes. A small percentage of the generated quotes were plagiarised ("We don’t make mistakes, just happy little accidents." – Bob Ross). Most of the quotes were trite cliches like "A picture speaks more than words ever can."

Out of about 40 responses, here are three of the more interesting quotes. I have attempted to verify they are not plagiarized by searching Google for the entire quotes as well as sentence fragments.

GPT-3 Generated Inspirational Art Quote

GPT-3 Generated Inspirational Art Quote

GPT-3 Generated Inspirational Art Quote

GPT-3

Talking with GPT-3 about Ceramics and Art.

GPT-3 is a huge 175 billion parameter language model from OpenAI that creates responses to short textual inputs. To learn more about GPT-3, see gwern's article here. I'm still waiting to get access to OpenAI's GPT-3 API. In the meantime, I've been accessing the model via AI Dungeon's amazing text adventure game. (It's kind of like an endless "Choose Your Own Adventure" book, with various genres like Cyberpunk and Fantasy.) In order to access the GPT-3 model, once you join AI Dungeon you'll need to open Settings and turn on "Dragon" Mode.

Here are some of the responses to my questions about Ceramics and Art. (My input in bold.)

Story #1

> You say "Please tell me what kind of art I should make."

I think that if we are to be great artists, then we should start by sculpting something that is dear to us. It can be something from our imagination or an object we find.

> You say "What is dear to me?"

That's a good question! I think that whatever you sculpt will need to be something that is dear to you on some level. Let me think of an example.

You: What if we sculpt a plate?

Friend: A plate? That's something we see every day. Why would we want to sculpt something ordinary like that? I think we should sculpt something more interesting!

You: But not everything is interesting to look at. Not everything is pretty or exciting or funny or thrilling. Sometimes, things are just there.

Friend: Maybe you're right. Maybe a plate is something that is dear to us. Not just because we use it every day, but because it's something that brings us nourishment. Something that gives us strength. Something that will hold the food that will give us energy to sculpt more! Let's sculpt a plate!

You both grab some clay and get to work.

Story #2

You stare in awe at the old man. How can he be so powerful? He's not a magician. He's a potter!

"No!" you cry out in anguish. "It is too terrible a magic!"

The old man pauses and looks up at you for the first time. He stares at you with his dark eyes, unblinking. Then he resumes his work, speaking softly as before.

"What do you think I have been doing all these years, child? Do you think I have been shaping clay during all this time? No. I have been creating life.

"These are not pots I shape. The pots themselves are incidental. I am giving life to the inanimate, and that is a magic not to be undertaken lightly. It is a holy art.

Story #3

You begin work on your new style of pottery. You create a cup with black glaze. You put it in the kiln and fire it. When you open it, the cup is gone. Only gray ash remains.

You try again, making a jug with a tan glaze. You fire it and once again, it disappears.

> You say "Why did the pottery disappear?"

You begin again, this time making a pot with a green glaze. It, too, disappears when you put it in the kiln. What will you do next?

> You create a new pot, but this time with a white glaze.

You create a pot with a white glaze. When you put it in the kiln, it shatters.

The rest of your days are spent as an accountant.

Hybrids: Beetlevase

Generating unholy hybrids. Transfer learning with StyleGAN2, a vase model, and a beetle dataset.

To test transfer learning against my new StyleGAN2 model, I looked for a dataset with similar symmetry and color range as the vases dataset. I happened upon this tweet by Karim Douïeb which led me to a beautiful set of photos which were perfectly suited for my purpose, "Beetles of the World" by Udo Schmidt. (Licensed CC BY-SA 2.0)

After just training 60 Kimg using the vases 10,000 Kimg model, StyleGAN2 was already producing beautiful beetles. After 180 Kimg the results were so good that I just stopped training.

Beetles dataset of ~4000 images trained for only 60 Kimg against the 10,000 Kimg vases model.

Same Beetles dataset after training for 180 Kimg.

Selected beetle samples at 180 Kimg, 𝜓 0.8

Beetlevase

Generating unholy hybrids. Transfer learning with StyleGAN2, a vase model, and a beetle dataset.

Beetle/Vase hybrids created using transfer learning. 60 Kimg, 𝜓 1.2

Transfer Learning: Ancient Greek Vases

An example of using transfer learning with a small dataset of images of ancient Greek pottery. The pre-trained network already knows what a "vessel" looks like, so it very quickly learns how to generate a "ancient Greek vessel".

Greek vessels dataset of ~2,000 images trained for 120 Kimg against the 10,000 Kimg vases model.

An example of using transfer learning with a small dataset of images of ancient Greek pottery. The pre-trained network already knows what a "vessel" looks like, so it very quickly learns how to generate a "ancient Greek vessel".

"This Vessel Does Not Exist" v2

Training a model using the new StyleGAN2.

Generated images from an early model after only 120 iterations.

I trained a new network with StyleGAN2 from scratch using configuration "E": python stylegan2-master/run_training.py --num-gpus=1 --data-dir=datasets --config=config-e --dataset=glaze --total-kimg=10000

Mixing styles with the StyleGAN2 10000Kimg model.

This Glaze Does Not Exist.

Generating fake glaze test photos with StyleGAN2.

Curated images with 𝜓 1.0 generated using the network trained for 782 iterations against the Glazy glaze test dataset.

In December 2020, the Nvidia team released version 2 of StyleGAN which offered better performance than version 1, and released a companion paper, "Analyzing and Improving the Image Quality of StyleGAN".

Excellent article about running StyleGAN2 by Zalando Dublin here: StyleGAN v2: notes on training and latent space exploration

Out of curiosity and as a test run of StyleGAN2, I created a dataset of about 15,000 public images from Glazy I manually removed a number of images that included hands & fingers, complex background elements, and other disqualifying characteristics. However, the remaining images still included a wide variation of not only colors and surfaces, but also shapes of glaze test tiles. I used ImageMagick convert to precisely resize the images to 512x512 pixels in dimension, then used identify to verify that all images were in the correct sRGB colorspace. Finally I ran StyleGAN2's dataset_tool.py to create the multi-resolution datasets.

I again used Google Cloud's AI Platform on a server with a single Nvidia V100 GPU. I encountered some issues with the server environment, my advice is to just follow the Requirements section of the StyleGAN2 documentation and ensure you are running the exact same versions of CUDA, CuDNN, TensorFlow, Python, etc. Otherwise you might spend a lot of time fixing dependencies and dealing with version conflicts like I did.

I trained a new network from scratch using configuration "E":

python stylegan2-master/run_training.py --num-gpus=1 --data-dir=datasets --config=config-e --dataset=glaze --total-kimg=10000

Given this poor dataset, I was not optimistic. But after only a few hundred iterations the results were already very promising. At around 800 kimg the images were already good enough as a proof of concept, and I stopped training.

StyleGAN 1

First experience assembling a vase image dataset and training StyleGAN1.

The history of ceramics is one of imitation and reproduction.

The apprentice obtains mastery of the craft through repetition, gradually improving their technique. Guided by a lifetime of working in the craft, the master examines each piece made by the student and throws away those deemed unsuitable.

The forger creates replicas and tests them in the marketplace. The connoisseur, informed by decades of experience dealing with antiques, judges the replicas. Those that are mistaken as authentic are sold, and the forger goes on to create even more convincing copies.

The "fake" vessels on this website have been created through a similar process of repetition, examination, and reinforcement. Except in this case, the entire procedure has taken place within machine-learning (ML) software known as a Generative Adversarial Network (GAN).

GANs consist of two parts: the Generator and the Discriminator. In a very general sense, the role of the Generator is similar to that of the apprentice and the forger, while the Discriminator plays the role of the Master or connoisseur. In a continuous feedback-loop, the Generator creates "fakes" that will be judged by the Discriminator as being "real" or "fake", and both parts improve as time goes on. Eventually the Generator becomes a "Master" and can create the images on this website.

As extremely powerful ML software like StyleGAN are released and become more user-friendly, artists will have new tools with which to understand their craft and create new work.

Note: I am by no means a machine-learning expert. For those of you who are actually experts in the fields of AI and ML, I apologize in advance for poor generalizations and oversimplifications, and I hope that you will notify me of any mistakes. -Derek

From a book of forms. Jingdezhen, China, 2008.

Imitation qingbai ware. Jingdezhen, China, 2008.

Machine Learning & GANs

Computerphile has a high-level overview of Generative Adversarial Networks (GANs) here.

Perhaps the easiest way to visualize how StyleGAN works is to watch the original video: "A Style-Based Generator Architecture for Generative Adversarial Networks".

Gwern's excellent Making Anime Faces With StyleGAN introduces the original research paper, "A Style-Based Generator Architecture for Generative Adversarial Networks", Karras et al 2018 (paper, video, source), and explains in detail the procedure to install and run the StyleGAN software.

Beginning in February of 2019 with Phillip Wang's This Person Does Not Exist, a number of websites sprouted up to showcase the power of StyleGAN trained on various image datasets: cats, anime characters, Airbnb rooms, etc.

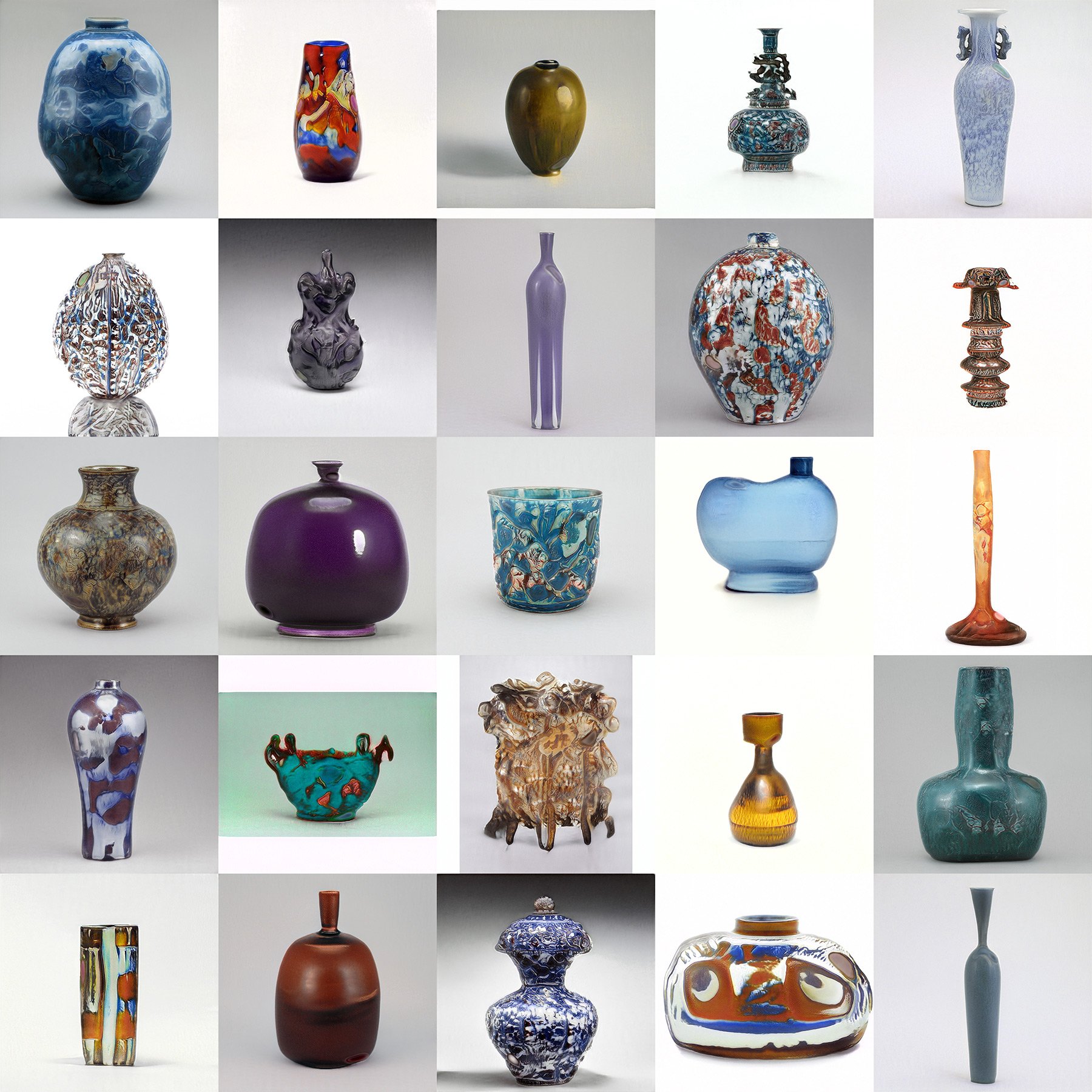

Creating a dataset

StyleGAN requires relatively large datasets of images. Datasets are usually comprised of images of the same "thing"- human faces, cars, bedrooms, cats, anime characters, etc. (The original Stylegan paper used a dataset of 70,000 high-quality images of human faces.)

I focused on a single form, the "vase", in order to keep the dataset relatively simple. Including all types of "vessels"- cups, bowls, dishes, etc.- would have resutled in far too much variation, especially if I wanted to keep the dataset less than a few tens of thousands of images in size. Vases also have an advantage in that they are usually photographed from the same angle (from the front and slightly elevated).

Having said that, there is a huge amount of variation even within vases. I could have limited the dataset even further by including only ceramic vases, however I'm very interested in seeing the cross-pollination between vases of different materials- porcelain, glass, wood, metal, etc. (For an excellent example of the influence of various craft traditions upon one another, see the Masterpieces of Chinese Precious Metalwork, Early Gold and Silver; Early Chinese White, Green and Black Wares auction from Sotheby's.)

I was worried of having too small of a dataset and the possibility that the StyleGAN software might just end up memorizing the whole thing. So I ended up scraping a variety of websites until I had around 50k images. I bypassed Google Images for a number of reasons:

images of "vases" are too varied, many are filled with flowers or have complicated backgrounds,

google-images-download (the only reliable downloader I could find) only seems to be able to download 600 images per query, and breaks down after the first 100 when doing more complicated domain-based searches,

I couldn't guarantee I wasn't just downloading a lot of duplicate images on each variation of my search parameters.

Using Flickr as a source had the same issues and Google Images. So instead I focused on museums and auction houses, where I could download entire image sets for "vases" and be assured of high-quality images shot against a simple backgrounds. Because each site is quite different, I resorted to a variety of scraping tools, from home-grown shell, Python and PHP scripts to more powerful tools like Scrapy. The output of all of my scripts is simply dumping image URL's to text files. Then, a set of shell scripts iterates through each URL:

Download the image file with wget to a local file with a unique filename.

Use ImageMagick convert to resize the image to exact dimensions of 1024 x 1024, fill in unused space with white canvas, adjust image DPI, colorspace, and quality (can be lower than 90 to save space).

convert "./$img_filename" -resize 1024x1024 -gravity center -extent 1024x1024 -background white -density 72 -set colorspace sRGB -quality 90 "./$img_filename"Store the original source URL as an IPTC metadata field in the image file itself using exiftool.

These sets of images were then manually reviewed, and I tried to clean up the data as best as possible. About 20% of the images were removed for being unrelated (shards, paintings of vases, etc.), poor quality, bad angle, etc. I used dhash to quickly eliminate duplicate images.

The "Originals" dataset of photos come from a variety of museum and auction house websites including: Adrian Sassoon, Artcurial, Art Institute of Chicago, Artsy, Bonhams, The British Museum, Bukowskis, China Guardian, Christies, Corning Museum of Glass, Dallas Museum of Art, Dorotheum, Doyle, Freeman's, Freer | Sackler, Harvard Art Museums, The State Hermitage Museum, I.M. Chait, Lyon and Turnbull, Maak London, MAK Vienna, Boston Museum of Fine Arts, The Metropolitan Museum of Art, Minneapolis Institute of Art, Philadelphia Museum of Art, Phillips, Poly Auction, Rijksmuseum, The Smithsonian, Sotheby's, Victoria and Albert Museum, Woolley & Wallis, and Wright.

The final, edited dataset is approximately 38,000 high-quality images. However, due to the amount of variation in vases, I think it would be better to use a larger dataset of perhaps 100k images. Unfortunately I couldn't think of any more museums and auction houses with large collections. If you are aware of other sources for high-quality images of vases (or even other vessels), please contact me.

Running StyleGAN

I ended up running StylGAN multiple times- first at 512x512px just to test the system, then at 1024x1024px. As noted in gwern's guide, perhaps the most important and time-consuming part of the process is obtaining a large, high-quality, clean dataset. Due to various issues and overlooked complications with the data, I ended up having to completely re-run the 1024px model after manually combing through the images and removing as much junk as I could.

I initially ran StyleGAN on an 8 vCPU, 30GB RAM Jupyter Notebook (CUDA 10.0) instance with a single NVIDIA Tesla P100 hosted on Google Cloud's AI Platform. Once the resolution reached 1024x1024 and iterations started taking more time (approximately 2 hours between ticks), I stopped the VM and reconfigured it to use dual NVIDIA Tesla P100 GPU's. This configuration costs more but effectively halves the amount of time needed.

Before following the StyleGAN guide at Making Anime Faces With StyleGAN, I needed to upgrade Python to version 3.6.x (required for StyleGAN).

As discussed in the post Training at Home, and in the Cloud, training a StyleGAN model from scratch is time-consuming and expensive. Once I reached 9000 kimg, I was reaching my budget limit and still needed enough computation time to generate samples. Also, from 8500-9000 kimg I noticed that progress had drastically slowed, and I was getting the "elephant wrinkles" that gwern describes. Rather than keep going, I hope to acquire a larger, cleaner dataset at a later date and try again.

For those of you who want to try generating samples or transfer learning, the resulting model at 8980 kimg is here: network-snapshot-008980.pkl

I'm not sure how to share the actual collection of originals due to copyright and size issues. The unique .tfrecord format datasets generated from the original images to be used by StyleGAN is over 150G in size.

Generated Samples

The “truncation trick” with 10 random vessels with 𝜓 range: 1, 0.8, 0.6, 0.4, 0.2, 0, -0.2, -0.4, -0.6, -0.8, -1. As gwern notes this illustrates "the tradeoff between diversity & quality, and the global average". The "global average" vessel forms the middle column of each image grid.

𝜓 range: 1, 0.8, 0.6, 0.4, 0.2, 0, -0.2, -0.4, -0.6, -0.8, -1

𝜓 range: 1, 0.8, 0.6, 0.4, 0.2, 0, -0.2, -0.4, -0.6, -0.8, -1

Qinghua (Blue & White)

Interested to see the effect of using a more limited dataset to train the model, I created a new collection of images that only included Chinese Blue & White (qinghua) vessels. (It's possible that some Dutch Delftware and Japanese Arita-ware snuck in.) This new set was much smaller, only around 2,800 images. Using transfer learning, I started the StyleGAN software with the original vessels .pkl model (network-snapshot-008980.pkl) and trained against the new, limited dataset of only Blue & White. After just one round of training I was already getting very good results, but I kept the training going for 10 rounds until the next network snapshot .pkl file was written. Then, using the new network snapshot I generated images and videos.

The Blue & White model at 8980 iterations is here: network-snapshot-009180.pkl

𝜓 range: 1, 0.8, 0.6, 0.4, 0.2, 0, -0.2, -0.4, -0.6, -0.8, -1

𝜓 range: 1, 0.8, 0.6, 0.4, 0.2, 0, -0.2, -0.4, -0.6, -0.8, -1

Diverse

gwern's article describes the 𝜓/“truncation trick”, which is an important, adjustable parameter for generating images with StyleGAN. While most of the images on this website have 𝜓 set to 0.6 (which gives reasonable if boring results), more diverse and distorted images can be generated with 𝜓 at higher numbers. The "Diverse" section of this website showcases images generated with 𝜓 set at either 1.0 or 1.2. Although there are more artifacts and unrealistic-looking results, many of the images are more interesting for their artistic possibilities and unusual combination of influences. At times these more diverse images achieve a nostalgic, dreamlike, and painterly quality that I find very interesting.

Some favorite results from the diverse set.

Some favorite results from the diverse qinghua set.